Screens are just another environment. We're teaching AI to navigate them.

Vision-language-action (VLA) models transformed robotics by learning from video instead of static images. The same paradigm shift is coming for computer use. We're building the training data for the next generation of computer use agents.

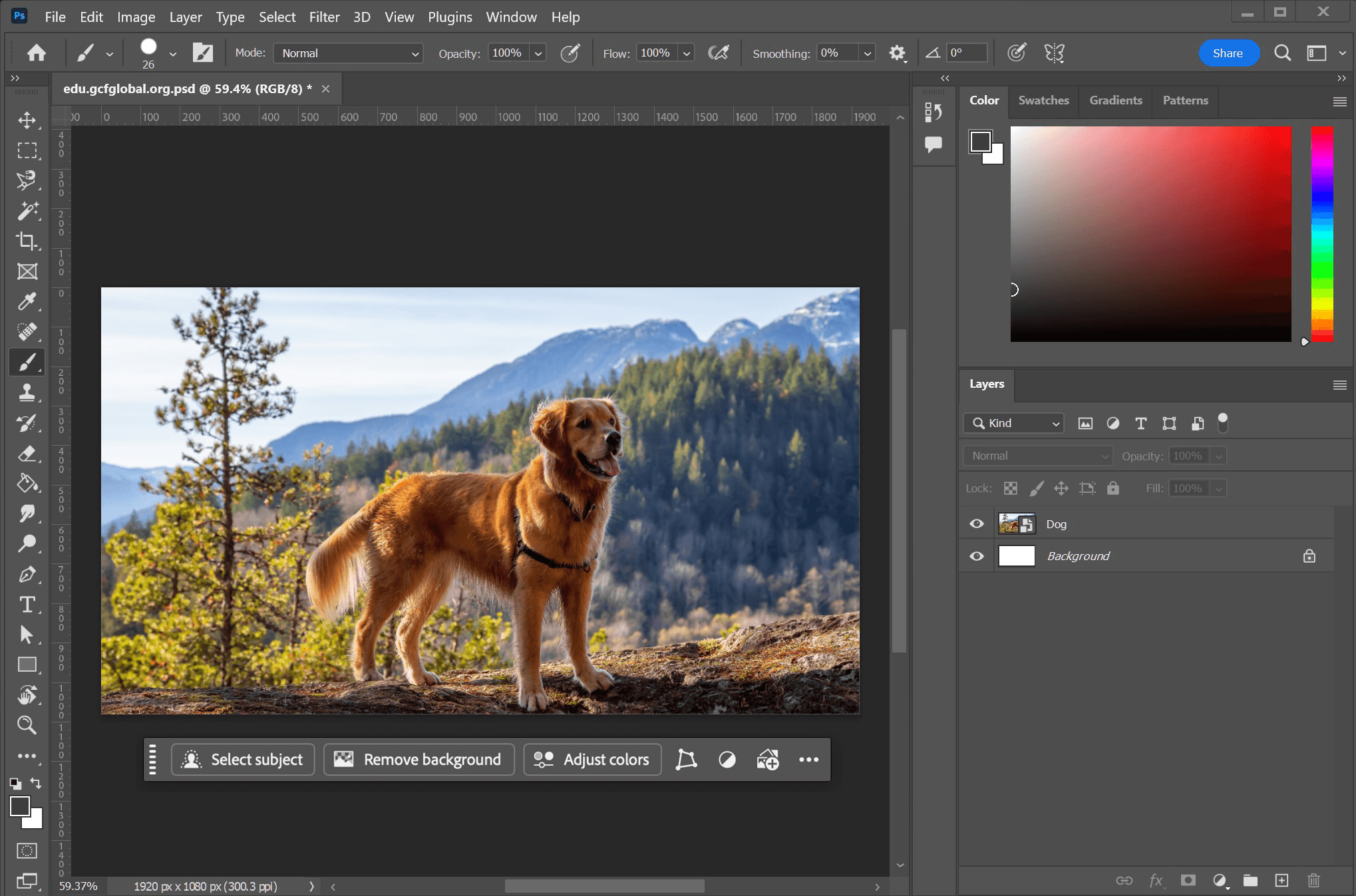

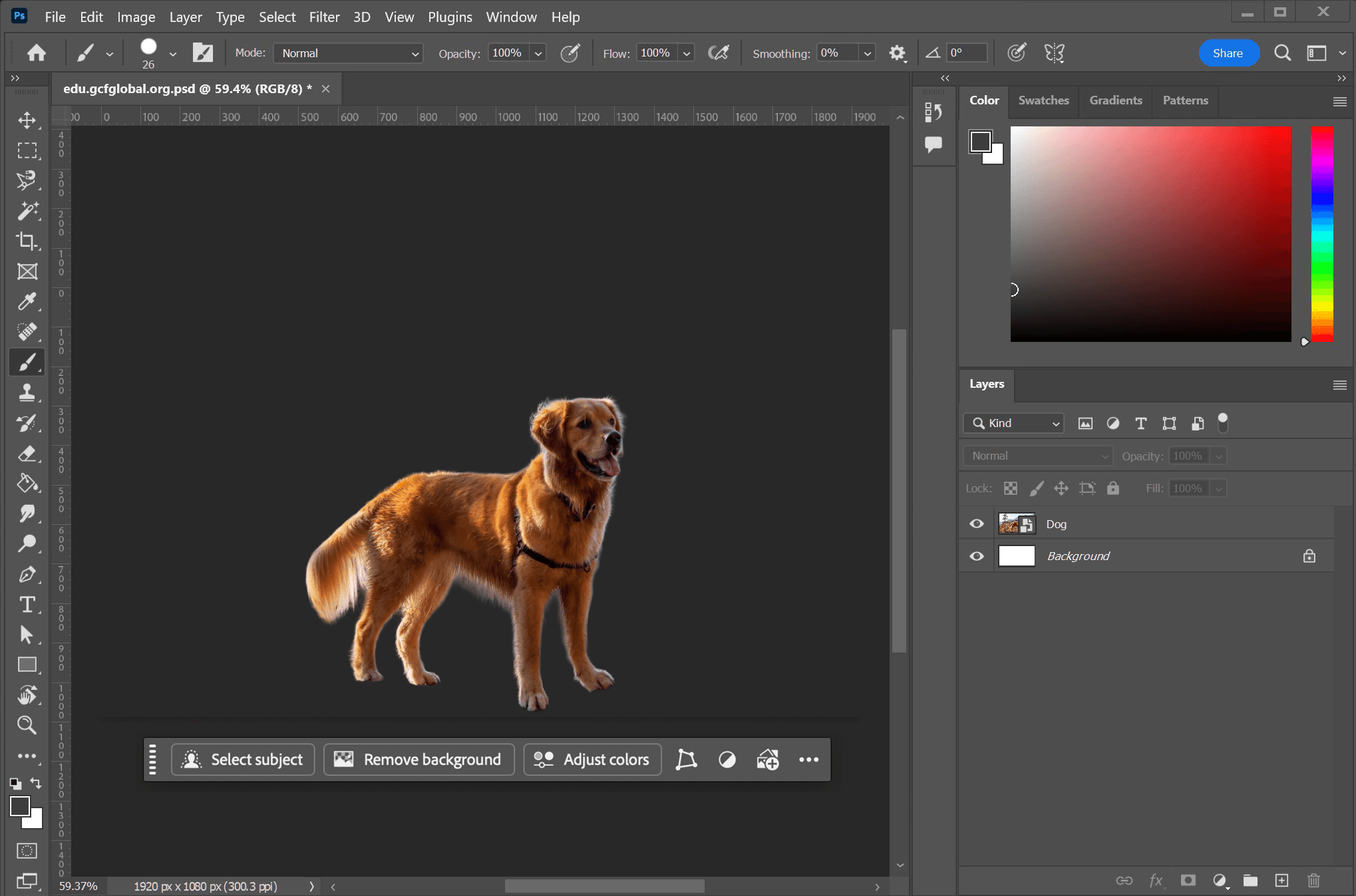

Photoshop workflow: Select Subject → Remove Background → Continue editing

What VLA did for robotics, we're doing for computer use.

Early robot learning processed static images. It didn't work. VLA models—video in, actions out—changed everything. Robots learned temporal patterns, error correction, fluid motion.

Current computer use agents process screenshots. Same problem. Same solution.

Current Paradigm

Screenshot → Reason → Click

Static frames. No state. 3-10 seconds per action. Breaks on anything dynamic.

Next Paradigm

Video → Continuous Action

Temporal perception. State tracking. Real-time. Learns from human demonstrations.

The models will come from Anthropic, OpenAI, and the other frontier labs. The training data will come from us. We're not competing on models. We're building the data infrastructure for the entire category.

Models can't automate what they've never seen.

The internet has documentation and tutorials. It doesn't have expert demonstrations. The gap isn't model capability—it's training data format and coverage.

No Long-Horizon Data

Tutorials show 5-step tasks. Real work is 200-step sessions with context, decisions, corrections.

No Internal Workflows

Your Salesforce config, admin panels, enterprise tools—zero coverage on the internet.

Wrong Format

Current datasets are screenshot-based. Video-native models need video-native data.

Procedural ≠ Declarative

You can't learn workflows from documentation. You need demonstrations. That's what we collect.

Screen recording is pixels. We add the semantic layer.

Raw video isn't training data. We capture the full signal: what happened, what it means, and why.

We scale collection through a global contributor network: professionals earn tokens for recording their own workflows. Expert demonstrations, not Mechanical Turk.

Every job on a computer can be automated.

Full digital automation requires two things: models that can operate computers reliably, and training data for specific workflows. The models are coming. The data doesn't exist.

We're building the data layer for the automation of all digital work.

Useful today: workflow documentation, internal knowledge, onboarding. Essential tomorrow: training data for automation.

We built computer use agents. We know what's missing.

Shirley Yan

- Built HoverGPT, a computer use agent, before Anthropic's demo

- AI dashcam & sensor data pipelines at Motive

- UC Berkeley Computer Science

- Angel investor — YC co-investments

Eric Liang

- Anthropic — RLHF Training for Claude Skills

- Apple Vision Pro — Spatial Understanding Tech Lead

- Top 10 globally in macOS system internals

- 2X Founder — YC W24, Aesop Labs

The window is now.

We're partnering with AI labs and enterprises building the next generation of computer use. Early access available.

The last human demonstrations.